Docker is one of the most popular implementations of “containerized software”, which provides a cross-platform and highly scalable environment for developing and running software.

Wait, what? What is “containerized software”?

Let's start with a short example. Say your main development machine runs Ubuntu and you want to develop a basic web page. Sure, you can install a web server natively on your main OS, but then your “setup” only works on your machine or on machines with the same OS.

A container is, roughly speaking, a stripped-down OS environment that (usually) contains just the software needed to run your app. Unlike a full virtual machine, it shares the kernel of the host system. So in our example, we would install an NGINX web server inside that container and build our web page inside it.

The main advantage is that the whole Docker ecosystem is cross-platform. When you are done creating your app on your Linux development machine, you can copy that Docker project to, e.g., a macOS or Windows machine, start Docker the same way you did on Linux, and everything should work.

So everything is handled via containers?

Well, we are not done explaining Docker yet: there are still “images” to explain.

As described before, a “container” is your app together with everything around it, already configured. But what if you want to build a web page very similar to the one from before?

Instead of setting up every container from scratch, you can start from ready-made “images”, which offer a pre-configured system where you only need to add your app code.

Images can therefore be seen as blueprints for your containers.

The available images (official and community-made) can be found on Docker Hub: https://hub.docker.com/search?q=&type=image

I want to create my first container!

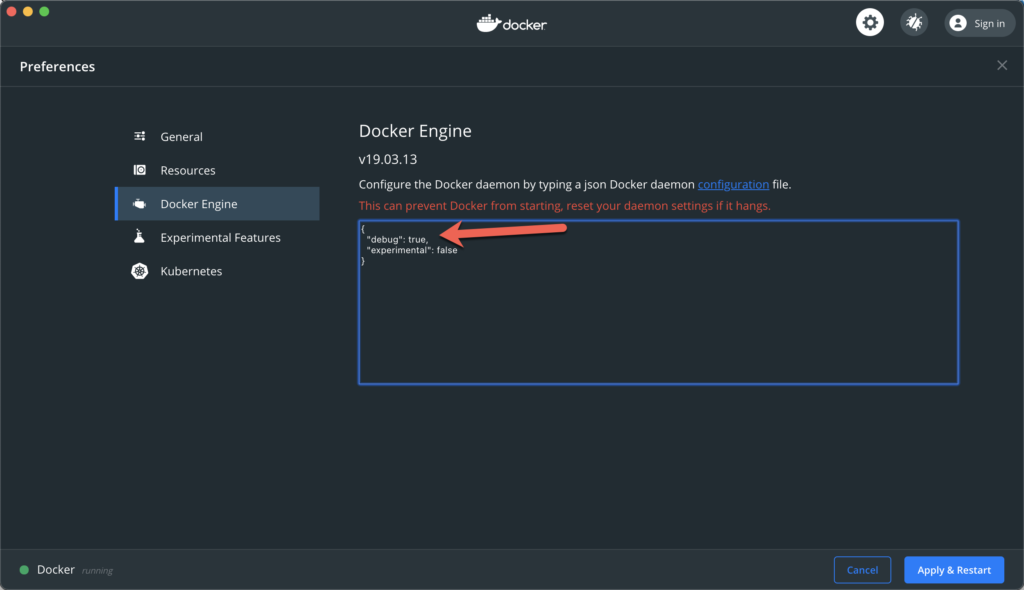

First of all, make sure you have installed the latest version of Docker by following https://docs.docker.com/get-docker/

Then open your CLI and enter the following command:

```shell
docker run --name some-nginx -v /Users/kevin/dockertest:/usr/share/nginx/html:ro -d -p 8080:80 nginx
```

Here is what each part of the command does:

- docker: The Docker CLI binary, which lets you manage everything Docker-related in your shell

- run: Create and start a new container
- --name: Give your container a human-friendly name
  - some-nginx: The human-friendly name
- -v: Bind-mount a local folder into the container
  - /Users/kevin/dockertest:/usr/share/nginx/html:ro
    - /Users/kevin/dockertest: The absolute path on your host system that you want to mount
    - /usr/share/nginx/html: The absolute path inside the container that it gets mounted into
    - ro: Read-only mode, so the container cannot change your files
- -d: Run the container detached from the shell, i.e. in the background
- -p: Publish (map) a host port to the container's internal port
  - 8080: The port used on the host system
  - 80: The port used inside the container
- nginx: The name of the image this container should be based on

Adjust the /Users/kevin/dockertest part to an absolute path on your own machine (any test folder will do).
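If you do not have a test folder yet, here is a minimal sketch for creating one from the shell and printing its absolute path. It assumes you want the folder inside your current directory; dockertest is only an example name, and HOST_DIR is a hypothetical variable introduced for this sketch:

```shell
# "dockertest" is only an example name; any empty folder works.
mkdir -p dockertest

# Resolve the folder's absolute path (the part in front of the colon in -v).
HOST_DIR="$(cd dockertest && pwd)"
echo "Use this path in the -v option: $HOST_DIR"
```

You could then write the mount in the docker run command as -v "$HOST_DIR":/usr/share/nginx/html:ro instead of typing the path by hand.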

On macOS or Linux, it should look something like this:

```
-> % docker run --name some-nginx -v /Users/kevin/dockertest:/usr/share/nginx/html:ro -d -p 8080:80 nginx
1d7b787fcbe8d6ee71b9e09908a2027c48d5c4b6cd146eb26314a0dacf763f2a
```

The long string is the ID of the newly created container. Nothing special so far, but now let's create an index.html in that directory:

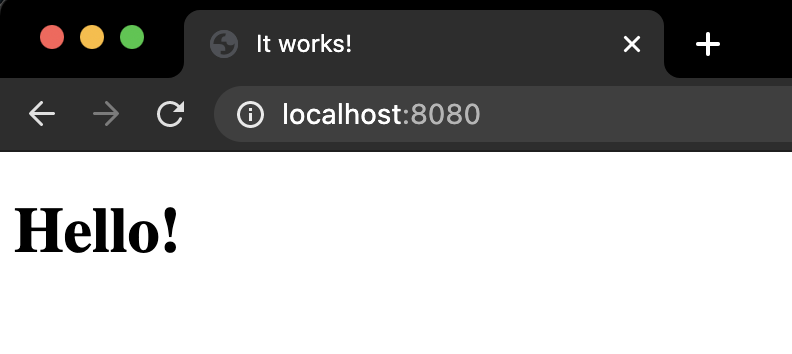

```html
<!doctype html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>It works!</title>
</head>
<body>
  <h1>Hello!</h1>
</body>
</html>
```

Now open http://localhost:8080 in your browser.

You should now see the HTML from above in your browser.
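If you would rather create index.html without leaving the terminal, a heredoc works too. This is a minimal sketch: HOST_DIR is a hypothetical variable that has to point at the same folder you mounted with -v, and it falls back to an assumed dockertest folder in the current directory:

```shell
# Must be the folder you mounted with -v; "$(pwd)/dockertest" is only an assumed default.
HOST_DIR="${HOST_DIR:-$(pwd)/dockertest}"
mkdir -p "$HOST_DIR"

# Write the page; the quoted 'EOF' stops the shell from expanding anything inside.
cat > "$HOST_DIR/index.html" <<'EOF'
<!doctype html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>It works!</title>
</head>
<body>
  <h1>Hello!</h1>
</body>
</html>
EOF
```

Because the folder is bind-mounted into the container, NGINX should serve the new file on the next page reload without restarting the container.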

You can now see your running container either in the Docker Dashboard (which comes with Docker Desktop) or by entering the following command:

```
-> % docker ps
CONTAINER ID   IMAGE     COMMAND                  CREATED          STATUS          PORTS                  NAMES
d6ee6e327100   nginx     "/docker-entrypoint.…"   12 minutes ago   Up 12 minutes   0.0.0.0:8080->80/tcp   some-nginx
```

Here you can see that a container named some-nginx was created 12 minutes ago and maps port 8080 on the host to port 80 in the container.

Other useful CLI commands

- docker images: List all images available locally

- docker rmi %image-name%: Remove a specific image

- docker pull %image-name%: Download a specific image, or update it to the latest version

- docker ps: List all running containers

- docker ps -a: List all containers (including stopped ones)

- docker rm %container-name%: Remove a specific container (stop it first with docker stop %container-name%)